Scheme of information transmission over a communication channel. Technical information transmission systems. Communication channels

Information transfer is a term that covers many physical processes of moving information in space. Each of these processes involves a source and a receiver of data, a physical carrier of the information, and a channel (medium) through which it is transmitted.

Information transfer process

The initial carriers of data are the various messages transmitted from their sources to receivers. Information transmission channels are located between them. Special technical converter devices (encoders) form physical data carriers, called signals, based on the content of the messages. The signals undergo a number of transformations, including encoding, compression and modulation, and are then sent to the communication lines. Having passed through them, the signals undergo the inverse transformations, including demodulation, decompression and decoding, as a result of which the original messages are extracted and perceived by the receivers.

Information messages

A message is a description of a phenomenon or an object, expressed as a collection of data with a marked beginning and end. Some messages, such as speech and music, are continuous functions of time (sound pressure as a function of time). In telegraph communication, a message is the text of a telegram in the form of an alphanumeric sequence. A television message is a sequence of frames that are "seen" by the television camera lens and captured at the frame rate. The overwhelming majority of messages transmitted today through information transmission systems are numerical arrays, text, graphics, and audio and video files.

Information signals

Information transmission is possible if there is a physical carrier whose characteristics change depending on the content of the transmitted message in such a way that they pass through the transmission channel with minimal distortion and can be recognized by the receiver. These changes in the physical carrier form an information signal.

Today, information is transmitted and processed using electrical signals in wired and radio communication channels, as well as thanks to optical signals in fiber-optic communication lines.

Analog and digital signals

A well-known example of an analog signal, i.e. one that changes continuously in time, is the voltage taken from a microphone, which carries a voice or musical information message. It can be amplified and transmitted via wired channels to the sound reproduction system of a concert hall, which carries speech and music from the stage to the audience in the gallery.

If, in accordance with the magnitude of the voltage at the output of the microphone, the amplitude or frequency of high-frequency electrical oscillations in the radio transmitter is continuously changed in time, then an analog radio signal can be transmitted over the air. A television transmitter in an analog television system generates an analog signal in the form of a voltage proportional to the current brightness of the image elements perceived by the camera lens.

However, if the analog voltage from the microphone output is passed through an analog-to-digital converter (ADC), then its output will no longer be a continuous function of time, but a sequence of readings of this voltage taken at regular intervals, at the sampling frequency. In addition, the ADC quantizes the level of the input voltage, replacing the entire possible range of its values with a finite set of values determined by the number of binary bits in its output code. In this way a continuous physical quantity (here, the voltage) is turned into a sequence of digital codes (is digitized) and can then be stored, processed and transmitted in digital form through information transmission networks. This significantly increases the speed and noise immunity of such processes.
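The two steps described above, sampling at regular intervals and quantization to a finite set of levels, can be sketched in a few lines. This is only an illustration: the function name `sample_and_quantize`, the 440 Hz test tone standing in for the microphone voltage, and the voltage range of -1 to 1 are assumptions, not part of the original description.

```python
import math

def sample_and_quantize(signal, n_samples, sample_rate_hz, bits, v_min=-1.0, v_max=1.0):
    """Take n_samples readings of signal(t) and map each to a `bits`-bit integer code."""
    levels = 2 ** bits                       # number of distinct output codes
    step = (v_max - v_min) / (levels - 1)    # voltage difference between adjacent codes
    codes = []
    for n in range(n_samples):
        t = n / sample_rate_hz               # sampling: readings at regular intervals
        v = min(max(signal(t), v_min), v_max)    # clip to the converter's input range
        codes.append(round((v - v_min) / step))  # quantization: nearest level
    return codes

def microphone_voltage(t):
    """Hypothetical analog input: a 440 Hz tone with amplitude 1."""
    return math.sin(2 * math.pi * 440.0 * t)

codes = sample_and_quantize(microphone_voltage, n_samples=8, sample_rate_hz=8000, bits=8)
print(codes)  # eight integers between 0 and 255
```

With more bits per code the quantization step becomes smaller and the digitized values follow the original voltage more closely, at the cost of more data to transmit.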

Communication channels

Usually, this term refers to the complexes of technical means involved in transferring data from the source to the receiver, as well as the environment between them. The structure of such a channel, using typical means of information transmission, is represented by the following sequence of transformations:

AI - PS - (CI) - CK - M - LPI - DM - DK - DI - PI

AI is the source of information: a person or other living being, a book, a document, an image on a non-electronic medium (canvas, paper), etc.

PS is the converter of an information message into an information signal, performing the first stage of data transmission. Microphones, television and video cameras, scanners, fax machines, PC keyboards, etc. can act as PS.

CI is the information encoder, which reduces the volume of information in the signal (compression) in order to increase its transmission speed or reduce the frequency band required for transmission. This link is optional, as the parentheses indicate.

CK is the channel encoder, which increases the noise immunity of the information signal.

M is the signal modulator, which changes the characteristics of an intermediate carrier signal depending on the value of the information signal. A typical example is amplitude modulation of a high-frequency carrier signal according to the magnitude of the low-frequency information signal.

LPI is the information transmission line: a combination of a physical medium (for example, an electromagnetic field) and the technical means for changing its state in order to transmit the carrier signal to the receiver.

DM is the demodulator, which separates the information signal from the carrier signal. It is present only if M is present.

DK is the channel decoder, which detects and/or corrects errors in the information signal that have arisen on the LPI. It is present only if CK is present.

DI is the information decoder. It is present only if CI is present.

PI - receiver of information (computer, printer, display, etc.).

If the transmission of information is two-way (duplex channel), then on both sides of the LPI there are modem blocks (Modulator-DEModulator), combining M and DM links, as well as codec blocks (COder-DECoder), combining encoders (CI and CK) and decoders (DI and DK).
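The amplitude modulation mentioned for the M link can be illustrated with a minimal sketch. The function name `amplitude_modulate`, the modulation depth parameter and the chosen frequencies are assumptions made for the example; the message samples are assumed to lie between -1 and 1.

```python
import math

def amplitude_modulate(message, carrier_hz, sample_rate_hz, depth=0.5):
    """Return samples of a carrier whose amplitude follows the message samples."""
    out = []
    for n, m in enumerate(message):
        t = n / sample_rate_hz
        carrier = math.cos(2 * math.pi * carrier_hz * t)
        out.append((1.0 + depth * m) * carrier)  # amplitude varies with the message
    return out

# Hypothetical low-frequency message: a 1 kHz tone sampled at 80 kHz.
message = [math.sin(2 * math.pi * 1000 * n / 80000) for n in range(80)]
signal = amplitude_modulate(message, carrier_hz=10000, sample_rate_hz=80000)
print(len(signal))
```

The carrier frequency is much higher than the message frequency, so the message appears as a slow change in the height of the carrier oscillations.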

Characteristics of transmission channels

The main distinguishing features of the channels are bandwidth and noise immunity.

In the channel, the information signal is exposed to noise and interference. These can have natural causes (for example, atmospheric interference in radio channels) or be created deliberately by an adversary.

The noise immunity of transmission channels is increased by using various kinds of analog and digital filters to separate information signals from noise, as well as special message transmission methods that minimize the effect of noise. One of these methods is to add extra characters that do not carry useful content, but help control the correctness of the message, as well as correct errors in it.

The channel capacity is equal to the maximum number of binary symbols (bits) that the channel can transmit in one second in the absence of interference. For various channels, it varies from a few kbit/s to hundreds of Mbit/s and is determined by their physical properties.

Information transfer theory

Claude Shannon is the author of a special theory of encoding transmitted data, which provides methods of dealing with noise. One of the main ideas of this theory is that the digital code transmitted over information transmission lines must be redundant. This makes it possible to restore the information if some part of the code is lost during transmission. Such codes (digital information signals) are called noise-immune (error-correcting) codes. However, the redundancy of the code cannot be made too great: it delays the transmission of information and raises the cost of communication systems.
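The trade-off between redundancy and cost can be seen in the simplest redundant code, the repetition code. It is used here only as an illustration and is not the optimal code that the theory aims for; the function names are hypothetical. Each bit is sent three times and the receiver takes a majority vote, so one corrupted copy per group is corrected, but the message becomes three times longer.

```python
def encode_repetition(bits, n=3):
    """Repeat every bit n times (n should be odd for a clear majority)."""
    return [b for b in bits for _ in range(n)]

def decode_repetition(coded, n=3):
    """Recover each bit by majority vote over its n received copies."""
    return [1 if sum(coded[i:i + n]) > n // 2 else 0
            for i in range(0, len(coded), n)]

message = [1, 0, 1, 1]
sent = encode_repetition(message)      # 12 bits on the line instead of 4
received = list(sent)
received[4] ^= 1                       # noise flips one bit during transmission
print(decode_repetition(received) == message)
```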

Digital signal processing

Another important component of the theory of information transmission is a system of methods for digital signal processing in transmission channels. These methods include algorithms for digitizing the original analog information signals at a sampling rate chosen on the basis of the sampling theorem (the Nyquist-Shannon theorem), methods for forming noise-immune carrier signals from them for transmission over communication lines, and digital filtering of received signals in order to separate them from interference.
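As an illustration of digital filtering of a received signal, here is a sketch of one of the simplest digital filters, a moving average. The choice of filter, the function name `moving_average` and the window length are assumptions; real receivers use filters designed for the specific signal and interference.

```python
def moving_average(samples, window=3):
    """Smooth a sampled signal by averaging each sample with its predecessors."""
    out = []
    for i in range(len(samples)):
        start = max(0, i - window + 1)       # shorter window at the very beginning
        chunk = samples[start:i + 1]
        out.append(sum(chunk) / len(chunk))
    return out

# A constant level of 1 with one noise spike at the third sample.
noisy = [1, 1, 4, 1, 1]
print(moving_average(noisy))
```

The spike is spread out and reduced in height, which is the price of this filter: it suppresses short disturbances but also blurs fast changes in the useful signal.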

Today, information is spreading so quickly that there is not always enough time to comprehend it. Most people rarely think about how and by what means it is transmitted, and even more so they do not imagine the scheme of information transmission.

Basic concepts

The transfer of information is the physical process of moving data (signs and symbols) in space. From the point of view of data transmission, it is a planned, technically supported operation that moves units of information within a set time from a source to a receiver through an information channel, or data transmission channel.

Data transmission channel - a set of means or a medium for data distribution. In other words, this is the part of the information transmission scheme that ensures the movement of information from the source to the recipient and, under certain conditions, back.

There are many classifications of data transmission channels. The main types are radio, optical, acoustic (wireless) and wired channels.

Technical channels of information transmission

Technical data transmission channels proper include radio channels, fiber-optic channels and cable. The cable can be coaxial or twisted pair. The first is an electrical cable with a copper conductor inside, and the second consists of insulated copper wires twisted together in pairs inside a dielectric sheath. These cables are quite flexible and easy to use. Optical fiber consists of fiber-optic filaments that transmit light signals through internal reflection.

The main characteristics are bandwidth and noise immunity. Throughput is usually understood to mean the amount of information that can be transmitted over the channel in a certain time. And noise immunity is the parameter of channel resistance to external interference (noise).

Understanding Data Transfer

If you do not specify the area of application, the general scheme of information transmission looks simple. It includes three components: "source", "receiver" and "transmission channel".

Shannon's scheme

Claude Shannon, an American mathematician and engineer, was at the forefront of information theory. He proposed a scheme for transmitting information through technical communication channels.

It is not difficult to understand this scheme. Especially if you imagine its elements in the form of familiar objects and phenomena. For example, a source of information is a person on the phone. The handset will be an encoder that converts speech or sound waves into electrical signals. The data transmission channel in this case is the communication centers, in general, the entire telephone network leading from one telephone set to another. The subscriber's handset acts as a decoder. It converts the electrical signal back into sound, that is, into speech.

In this diagram of the information transfer process, the data is represented as a continuous electrical signal. This kind of communication is called analog.

Coding concept

Coding is considered to be the transformation of information sent by a source into a form suitable for transmission over the communication channel used. The clearest example of coding is Morse code. In it, information is converted into a sequence of dots and dashes, that is, short and long signals. The receiving party must decode this sequence.
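The Morse code example can be sketched as a pair of functions: the sender encodes letters into dots and dashes, and the receiving party decodes them. The function names are hypothetical, and the sketch handles letters only, with one space between the codes of adjacent letters.

```python
MORSE = {
    "A": ".-", "B": "-...", "C": "-.-.", "D": "-..", "E": ".", "F": "..-.",
    "G": "--.", "H": "....", "I": "..", "J": ".---", "K": "-.-", "L": ".-..",
    "M": "--", "N": "-.", "O": "---", "P": ".--.", "Q": "--.-", "R": ".-.",
    "S": "...", "T": "-", "U": "..-", "V": "...-", "W": ".--", "X": "-..-",
    "Y": "-.--", "Z": "--..",
}
FROM_MORSE = {code: letter for letter, code in MORSE.items()}

def morse_encode(text):
    """Convert letters to dot-dash groups separated by spaces (other characters are skipped)."""
    return " ".join(MORSE[ch] for ch in text.upper() if ch in MORSE)

def morse_decode(signal):
    """Convert space-separated dot-dash groups back to letters."""
    return "".join(FROM_MORSE[group] for group in signal.split())

encoded = morse_encode("SOS")
print(encoded)
print(morse_decode(encoded))
```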

Modern technologies use digital communication. In it, information is converted (encoded) into binary data, that is, into 0s and 1s. There is even a binary alphabet. This kind of communication is called discrete.
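Encoding into binary form can be illustrated with text. In this sketch, which assumes UTF-8 as the character encoding and uses hypothetical function names, every byte of the text becomes eight binary digits, and the receiver reverses the process.

```python
def text_to_bits(text):
    """Encode text as a string of 0s and 1s, eight bits per UTF-8 byte."""
    return "".join(f"{byte:08b}" for byte in text.encode("utf-8"))

def bits_to_text(bits):
    """Decode a string of 0s and 1s (a multiple of eight bits) back into text."""
    data = bytes(int(bits[i:i + 8], 2) for i in range(0, len(bits), 8))
    return data.decode("utf-8")

bits = text_to_bits("Hi")
print(bits)
print(bits_to_text(bits))
```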

Interference in information channels

There is also noise in the data transmission scheme. The concept of "noise" in this case means interference that distorts the signal and, as a result, causes loss of information. There are various reasons for the interference. For example, information channels can be poorly isolated from each other. To prevent interference, various technical protection methods are used: filters, shielding, etc.

K. Shannon developed coding theory and proposed using it to combat noise. The idea is that, since information can be lost under the influence of noise, the transmitted data must be redundant, but not so redundant as to noticeably reduce the transmission rate.

In digital communication channels, information is divided into parts called packets, and a checksum is calculated for each of them. This checksum is sent with every packet. The receiver recalculates the checksum and accepts the packet only if the result matches the one that was sent. Otherwise, the packet is sent again, and so on until the sent and received checksums match.
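The procedure described above can be simulated. This is a sketch under stated assumptions: the checksum is taken to be the sum of the bytes modulo 256, the channel is a hypothetical function that flips one random bit with a given probability, and all names are invented for the example. Real protocols use stronger checks such as CRC.

```python
import random

def checksum(payload: bytes) -> int:
    """Assumed checksum: sum of all payload bytes, modulo 256."""
    return sum(payload) % 256

def noisy_channel(frame: bytes, error_rate: float, rng: random.Random) -> bytes:
    """With probability error_rate, flip one random bit of the frame."""
    if rng.random() < error_rate:
        corrupted = bytearray(frame)
        corrupted[rng.randrange(len(frame))] ^= 1 << rng.randrange(8)
        return bytes(corrupted)
    return frame

def send_with_retries(payload: bytes, error_rate=0.3, seed=0, max_tries=100):
    """Resend the packet until the receiver's recalculated checksum matches."""
    rng = random.Random(seed)
    frame = payload + bytes([checksum(payload)])   # checksum travels with the packet
    for attempt in range(1, max_tries + 1):
        received = noisy_channel(frame, error_rate, rng)
        data, received_sum = received[:-1], received[-1]
        if checksum(data) == received_sum:         # receiver recalculates and compares
            return data, attempt
    raise RuntimeError("packet could not be delivered")

data, attempts = send_with_retries(b"hello")
print(data, attempts)
```

In this simulation a single flipped bit always changes either the byte sum or the transmitted checksum, so every corrupted packet is rejected and resent.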

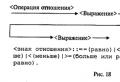

The information transfer process is shown schematically in the figure. This assumes that there is a source and recipient of information. The message from the source to the recipient is transmitted through a communication channel (information channel).

Figure 3. The process of transferring information

In such a process, information is presented and transmitted in the form of a sequence of signals, symbols or signs. For example, during a direct conversation between people, sound signals (speech) are transmitted, and while reading a text, a person perceives letters, which are graphic symbols. The transmitted sequence is called a message. From the source to the receiver, a message is transmitted through some material medium (sound is carried by acoustic waves in the atmosphere, an image by light, that is, electromagnetic waves). If technical means of communication are used in the transmission process, they are called communication channels (information channels). These include telephone, radio and television.

We can say that the human sense organs play the role of biological information channels. With their help, the informational impact on a person is conveyed to memory.

Claude Shannon proposed a diagram of the process of transmitting information through technical communication channels, shown in the figure.

Figure 4. The process of transferring information according to Shannon

The operation of such a scheme can be explained using a telephone conversation. The source of information is the speaking person. The encoder is the microphone of the telephone handset, which converts sound waves (speech) into electrical signals. The communication channel is the telephone network (wires and the switches of the telephone exchanges through which the signal passes). The decoding device is the earpiece of the listening person's handset; the listener is the information receiver. Here, the incoming electrical signal is turned back into sound.

Communication in which transmission is in the form of a continuous electrical signal is called analog communication.

Coding is understood as any transformation of information coming from a source into a form suitable for its transmission over a communication channel.

Currently, digital communication is widely used, when the transmitted information is encoded into binary form (0 and 1 are binary digits), and then decoded into text, image, sound. Digital communications are discrete.

The term "noise" refers to all kinds of interference that distort the transmitted signal and lead to loss of information. Such interference arises primarily for technical reasons: poor quality of communication lines, or insufficient isolation between different streams of information transmitted over the same channels. In such cases, noise protection is required.

First of all, technical methods of protecting communication channels from the effects of noise are used. Examples are using a shielded cable instead of a bare wire, and using various kinds of filters that separate the useful signal from noise.

Claude Shannon developed a special coding theory that provides methods for dealing with noise. One of the important ideas of this theory is that the code transmitted over the communication line must be redundant. Due to this, the loss of some part of the information during transmission can be compensated.

However, you cannot make the redundancy too large. This will lead to delays and higher communication costs. K. Shannon's coding theory makes it possible to obtain a code that is optimal: the redundancy of the transmitted information is the minimum possible, and the reliability of the received information is the maximum.

In modern digital communication systems, the following technique is often used to combat the loss of information during transmission. The entire message is split into chunks - blocks. For each block, a checksum is calculated (the sum of binary digits), which is transmitted along with this block. At the receiving point, the checksum of the received block is recalculated, and if it does not match the original, then the transmission of this block is repeated. This will continue until the initial and final checksums match.
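Splitting a message into blocks and computing the sum of binary digits for each block can be sketched as follows. The function names and the block size are assumptions made for the example. The sketch also shows a known weakness of such a simple checksum: it notices a single flipped bit, but not an error that leaves the number of ones unchanged.

```python
def split_into_blocks(data: bytes, block_size: int):
    """Cut the message into consecutive blocks of at most block_size bytes."""
    return [data[i:i + block_size] for i in range(0, len(data), block_size)]

def bit_sum(block: bytes) -> int:
    """Checksum as described in the text: the number of binary ones in the block."""
    return sum(bin(byte).count("1") for byte in block)

blocks = split_into_blocks(b"abcdefg", 3)
sums = [bit_sum(block) for block in blocks]
print(blocks, sums)

# One flipped bit changes the sum, so the error is detected...
print(bit_sum(b"\x05") != bit_sum(b"\x04"))
# ...but 00000101 received as 00000110 has the same sum and goes unnoticed.
print(bit_sum(b"\x05") == bit_sum(b"\x06"))
```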

The information transfer rate is the information volume of a message transmitted per unit of time. Units of information flow rate: bit/s, byte/s, etc.

Technical data communication lines (telephone lines, radio communication, fiber-optic cable) have a data rate limit called the bandwidth of the information channel. The limits on the transfer rate are physical in nature.
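Since the transfer rate is volume divided by time, the time needed to send a message follows directly from its size and the channel rate. A minimal worked example, with a hypothetical function name and illustrative numbers:

```python
def transfer_time_seconds(size_bytes, rate_bits_per_s):
    """Time to transmit size_bytes over a channel running at rate_bits_per_s."""
    return size_bytes * 8 / rate_bits_per_s   # 8 bits in every byte

# A 1,000,000-byte file over an 8 Mbit/s channel.
print(transfer_time_seconds(1_000_000, 8_000_000))
```

The factor of 8 matters in practice: file sizes are usually quoted in bytes, while channel rates are quoted in bits per second.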

We represent the process of transferring information by means of a model in the form of a diagram shown in Figure 3.

Figure 3. Generalized model of information transmission system

Consider the main elements that make up this model, as well as the information transformations that occur in it.

1. Source of information or message (AI) is a material object or subject of information capable of accumulating, storing, transforming and issuing information in the form of messages or signals of various physical nature. This can be a computer keyboard, a person, an analog output from a video camera, etc.

We will consider two types of information sources: if in a finite time interval the information source creates a finite set of messages, it is discrete; otherwise it is continuous. We will consider sources in more detail in the next lesson.

Information in the form of an initial message from the output of the information source enters the input of the encoder, which includes the source encoder (CI) and the channel encoder (CK).

2. Coder.

2.1. Source coder. It converts a message into a primary signal, a set of elementary symbols.

Note that a code is a universal way of displaying information during its storage, transmission and processing in the form of a system of unambiguous correspondences between message elements and signals, with the help of which these elements can be fixed. Encoding can always be reduced to an unambiguous conversion of characters from one alphabet to characters from another. Moreover, the code is a rule, a law, an algorithm according to which this transformation is carried out.

The code is the complete set of all possible combinations of symbols of the secondary alphabet, built according to this law. Combinations of characters belonging to a given code are called code words. In each specific case, all or only some of the code words belonging to the code may be used. Moreover, there are "powerful codes", all combinations of which are almost impossible to enumerate. Therefore, by the word "code" we mean, first of all, the law according to which the transformation is carried out, as a result of which we obtain code words, the full set of which belongs to this code and not to some other code built according to a different law.

The symbols of the secondary alphabet, regardless of the basis of the code, are only carriers of messages. In this case, the message is the letter of the primary alphabet, regardless of the specific physical or semantic content that it reflects.

Thus, the goal of the source coder is to present information in the most compact form. This is necessary in order to efficiently use the resources of the communication channel or storage device. In more detail, the issues of coding sources will be discussed in topic number 3.
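One classical way to obtain a compact representation is Huffman coding, in which frequent symbols receive short code words and rare symbols receive long ones. The text does not name a particular method, so this choice and the function names below are assumptions made for illustration.

```python
import heapq
from collections import Counter

def huffman_code(text):
    """Build a prefix code {symbol: bit string} from symbol frequencies."""
    freq = Counter(text)
    if len(freq) == 1:                       # a single symbol still needs one bit
        return {next(iter(freq)): "0"}
    # Heap entries: (weight, unique tie-breaker, partial code table).
    heap = [(w, i, {sym: ""}) for i, (sym, w) in enumerate(freq.items())]
    heapq.heapify(heap)
    next_id = len(heap)
    while len(heap) > 1:
        w1, _, c1 = heapq.heappop(heap)      # the two lightest subtrees
        w2, _, c2 = heapq.heappop(heap)
        merged = {s: "0" + c for s, c in c1.items()}
        merged.update({s: "1" + c for s, c in c2.items()})
        heapq.heappush(heap, (w1 + w2, next_id, merged))
        next_id += 1
    return heap[0][2]

def huffman_encode(text, code):
    return "".join(code[ch] for ch in text)

def huffman_decode(bits, code):
    inverse = {word: sym for sym, word in code.items()}
    out, buffer = [], ""
    for bit in bits:
        buffer += bit
        if buffer in inverse:                # no code word is a prefix of another
            out.append(inverse[buffer])
            buffer = ""
    return "".join(out)

code = huffman_code("aaaabbc")
bits = huffman_encode("aaaabbc", code)
print(code, len(bits))
```

For this seven-symbol message a fixed-length code needs two bits per symbol, 14 bits in total, while the variable-length code needs fewer because the frequent symbol "a" gets a one-bit code word.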

2.2. Channel encoder. When information is transmitted over a communication channel with interference, errors may occur in the received data. If such errors are small or rarely occur, the information can be used by the consumer. With a large number of errors, the information received cannot be used.

Channel coding, or noise-immune coding, is a method of processing transmitted data that reduces the number of errors arising during transmission over a noisy channel.

At the output of the channel encoder, a sequence of code symbols is formed, called the code sequence. The issues of channel coding will be discussed in more detail in topic 5, as well as in the course "Theory of electrical communication".

It should be noted that neither error-correcting coding nor data compression is a mandatory operation when transmitting information. These procedures (and their corresponding blocks in the structural diagram) may be absent. However, this can lead to very significant losses in the noise immunity of the system, a significant decrease in the transmission rate and a decrease in the quality of information transmission. Therefore, almost all modern systems (with the exception, perhaps, of the simplest ones) include efficient and noise-immune data coding.

3. Modulator. To transmit a message, the symbols of the secondary alphabet must be assigned specific physical characteristics. The process of acting on an encoded message in order to turn it into a signal is called modulation. The functions of the modulator are to match the source messages, or the code sequences generated by the encoder, to the properties of the communication line, and to make it possible to transmit a large number of messages simultaneously over a common communication channel.

Therefore, the modulator must convert the source messages, or their corresponding code sequences, into signals (superimpose the messages on signals) whose properties allow efficient transmission over the existing communication channels. In this case, the signals belonging to the many information transmission systems operating, for example, in a common radio channel must be such as to ensure independent transmission of messages from all sources to all recipients of information. Various modulation methods are studied in detail in the course "Theory of electrical communication".

We can say that the purpose of the encoder and modulator is to match the information source to the communication line.

4. Communication line. This is the medium in which information-carrying signals propagate. The communication channel and the communication line should not be confused. A communication channel is a set of technical means designed to transfer information from a source to a recipient.

Depending on the propagation medium, there are radio, wire, fiber-optic, acoustic and other channels. There are many models describing communication channels in greater or lesser detail; however, in the general case, a signal passing through a communication channel undergoes attenuation, acquires a certain time delay (or phase shift), and is corrupted by noise.
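The three effects named above, attenuation, delay and noise, can be combined into a very simple channel model. This is a sketch under assumptions: the noise is taken to be additive and Gaussian, the delay is a whole number of samples, and the function and parameter names are invented for the example.

```python
import random

def channel(samples, gain=0.5, delay=2, noise_std=0.05, seed=0):
    """Delay the signal by `delay` samples, scale it by `gain`, and add random noise."""
    rng = random.Random(seed)                    # fixed seed makes the run repeatable
    delayed = [0.0] * delay + list(samples)      # time delay: silence first
    return [gain * s + rng.gauss(0.0, noise_std) for s in delayed]

received = channel([1.0, 1.0, 1.0], gain=0.5, delay=2, noise_std=0.05)
print(received)
```

The output is two samples longer than the input, about half as strong, and slightly different on every sample because of the added noise.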

To increase the throughput of communication lines, messages from several sources can be transmitted over them simultaneously. This technique is called multiplexing. In this case, messages from each source are transmitted through their own communication channel, although they share a common communication line.
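Sharing one line among several sources can be illustrated with time-division interleaving, one of several possible ways of doing it. The sketch assumes that all sources produce streams of equal length; the function names are hypothetical.

```python
def multiplex(streams):
    """Interleave the streams: each frame carries one symbol from every source."""
    return [symbol for frame in zip(*streams) for symbol in frame]

def demultiplex(line, n_sources):
    """Split the shared line back into one stream per source."""
    return [line[i::n_sources] for i in range(n_sources)]

line = multiplex(["abc", "123"])
print(line)
print(demultiplex(line, 2))
```

Each source keeps its own logical channel (every second position on the line), although all symbols travel over the same line.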

Mathematical models of communication channels will be considered in the course "Theory of electrical communication". The information characteristics of communication channels will be discussed in detail within the framework of our discipline when studying topic number 4.

5. Demodulator. The received (reproduced) message generally differs from the sent one because of interference. The received message will be called an estimate (meaning an estimate of the message).

To reproduce the message estimate, the receiver of the system must first, from the received waveform and taking into account information about the signal shape and modulation method used in the transmission, obtain an estimate of the code sequence, called the received sequence. This procedure is called demodulation, detection or signal reception. Demodulation should be performed so that the received sequence differs as little as possible from the transmitted code sequence. Optimal signal reception in radio engineering systems is the subject of the TPP course.
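Demodulation with knowledge of the signal shape can be sketched for one specific scheme, binary phase-shift keying, which is chosen here only as an example. The modulator sends a cosine burst for 1 and its inverse for 0; the demodulator multiplies the received waveform by the known cosine and decides by the sign of the sum. All names and parameter values are assumptions.

```python
import math

def carrier(n, samples_per_bit, cycles_per_bit):
    """Known carrier shape at sample n within one bit interval."""
    return math.cos(2 * math.pi * cycles_per_bit * n / samples_per_bit)

def bpsk_modulate(bits, samples_per_bit=8, cycles_per_bit=2):
    """Map each bit to a carrier burst: +carrier for 1, -carrier for 0."""
    out = []
    for b in bits:
        sign = 1.0 if b else -1.0
        out.extend(sign * carrier(n, samples_per_bit, cycles_per_bit)
                   for n in range(samples_per_bit))
    return out

def bpsk_demodulate(signal, samples_per_bit=8, cycles_per_bit=2):
    """Correlate each bit interval with the carrier and decide by the sign."""
    bits = []
    for start in range(0, len(signal), samples_per_bit):
        corr = sum(signal[start + n] * carrier(n, samples_per_bit, cycles_per_bit)
                   for n in range(samples_per_bit))
        bits.append(1 if corr > 0 else 0)
    return bits

sent_bits = [1, 0, 1, 1, 0]
waveform = bpsk_modulate(sent_bits)
print(bpsk_demodulate(waveform) == sent_bits)
```

Because the decision uses the whole bit interval rather than a single sample, moderate disturbances on individual samples do not change the result.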

6. Decoder.

6.1. Channel decoder. In general, the received sequences may differ from the transmitted code words, that is, contain errors. The number of such errors depends on the level of interference in the communication channel, the transmission rate, the modulation method, and the reception (demodulation) method. The task of the channel decoder is to detect and, if possible, correct these errors. The procedure for detecting and correcting errors in the received sequence is called channel decoding. The result of decoding is an estimate of the information sequence. The error-correcting code, the coding method and the decoding method should be chosen so that as few uncorrected errors as possible remain at the output of the channel decoder.
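Detection and correction of an error can be shown with the Hamming (7,4) code, a standard textbook code used here as an example; the text itself does not name a specific code, and the function names are hypothetical. Four data bits are protected by three parity bits, and the decoder can locate and fix any single corrupted bit in the seven-bit code word.

```python
def hamming74_encode(data):
    """Encode 4 data bits as the 7-bit code word [p1, p2, d1, p3, d2, d3, d4]."""
    d1, d2, d3, d4 = data
    p1 = d1 ^ d2 ^ d4      # covers positions 1, 3, 5, 7
    p2 = d1 ^ d3 ^ d4      # covers positions 2, 3, 6, 7
    p3 = d2 ^ d3 ^ d4      # covers positions 4, 5, 6, 7
    return [p1, p2, d1, p3, d2, d3, d4]

def hamming74_decode(received):
    """Return (data bits, error position); position 0 means no error was detected."""
    c = list(received)
    s1 = c[0] ^ c[2] ^ c[4] ^ c[6]
    s2 = c[1] ^ c[2] ^ c[5] ^ c[6]
    s3 = c[3] ^ c[4] ^ c[5] ^ c[6]
    position = s1 + 2 * s2 + 4 * s3    # syndrome gives the 1-based error position
    if position:
        c[position - 1] ^= 1           # correct the single-bit error
    return [c[2], c[4], c[5], c[6]], position

word = hamming74_encode([1, 0, 1, 1])
damaged = list(word)
damaged[5] ^= 1                        # the channel flips the sixth bit
print(hamming74_decode(damaged))
```

If two or more bits of the same code word are corrupted, this code can no longer restore the data correctly, which is why the choice of code has to match the expected level of interference.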

The issues of error-correcting coding / decoding in information transmission (and storage) systems are currently being given exceptional attention, since this technique can significantly improve the quality of its transmission. In many cases, when the requirements for the reliability of the received information are very high (in computer data transmission networks, in remote control systems, etc.), transmission without error-correcting coding is generally impossible.

6.2. Source decoder. Since the source information was encoded during transmission to give it a more compact (or more convenient) representation (data compression, economical coding, source coding), it must be restored to its original (or almost original) form from the received sequence. The recovery procedure is called source decoding and can either be simply the inverse of the encoding operation (lossless encoding / decoding) or restore an approximate value of the original information. The restoration operation also includes, if necessary, the reconstruction of a continuous function from a set of discrete estimated values.

It must be said that recently, economical coding has taken an increasingly prominent place in information transmission systems, since, together with error-correcting coding, it turned out to be the most effective way to increase the speed and quality of its transmission.

7. Recipient of information - a material object or subject that perceives information in all forms of its manifestation for the purpose of its further processing and use.

Recipients of information can be both people and technical means that accumulate, store, transform, transmit or receive information.

Information transfer scheme. Information transmission channel. Information transfer rate.

There are three types of information processes: storage, transmission, processing.

Data storage:

· Information carriers.

· Types of memory.

· Information storage.

· Basic properties of information storages.

The following concepts are associated with information storage: information carrier (memory), internal memory, external memory, information storage.

A storage medium is a physical medium that directly stores information. Human memory can be called random access memory. Learned knowledge is reproduced by a person instantly. We can also call our own memory internal memory, since its carrier - the brain - is inside us.

All other types of information carriers can be called external (in relation to a person): wood, papyrus, paper, etc. Information storage is information organized in a certain way on external media intended for long-term storage and permanent use (for example, document archives, libraries, card indexes). The main information unit of the repository is a certain physical document: a questionnaire, a book, etc. The organization of a repository is understood as the presence of a certain structure, i.e. orderliness, classification of stored documents for the convenience of working with them. The main properties of information storage: the amount of stored information, storage reliability, access time (i.e., the time to search for the necessary information), information security.

Information stored on computer memory devices is commonly called data. Organized data storages on external memory devices of a computer are usually called databases and data banks.

Data processing:

· General diagram of the information processing process.

· Statement of the processing task.

· Executor of processing.

· Algorithm of processing.

· Typical tasks of information processing.

Information processing scheme:

Initial information - processing executor - summary information.

In the process of information processing, a certain information problem is solved, which can be preliminarily stated in the traditional form: a certain set of initial data is given, and some results are required. The very process of moving from the initial data to the result is the processing process. The object or subject performing the processing is called the executor of the processing.

For the successful execution of information processing, the executor (person or device) must know the processing algorithm, i.e. sequence of actions that must be performed in order to achieve the desired result.

There are two types of information processing. The first type of processing: processing associated with obtaining new information, new content of knowledge (solving mathematical problems, analyzing the situation, etc.). The second type of processing: processing associated with changing the form, but not changing the content (for example, translating text from one language to another).

An important type of information processing is coding - the transformation of information into a symbolic form, convenient for its storage, transmission, processing. Coding is actively used in technical means of working with information (telegraph, radio, computers). Another type of information processing is data structuring (adding a certain order to the information storage, classification, cataloging of data).

Another type of information processing is the search in a certain information storage for the necessary data that satisfy certain search conditions (query). The search algorithm depends on how the information is organized.
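The dependence of the search algorithm on the organization of the data can be shown with two cases. In an unordered storage every record may have to be examined, while in a sorted storage the search can halve the remaining range at each step. The function names and the sample catalog are assumptions for the example; `bisect_left` is from the Python standard library.

```python
from bisect import bisect_left

def linear_search(items, target):
    """Unordered storage: check records one by one. Returns the index or -1."""
    for index, item in enumerate(items):
        if item == target:
            return index
    return -1

def binary_search(sorted_items, target):
    """Sorted storage: repeatedly halve the search range. Returns the index or -1."""
    index = bisect_left(sorted_items, target)
    if index < len(sorted_items) and sorted_items[index] == target:
        return index
    return -1

catalog = ["atlas", "bible", "codex", "diary"]   # already in alphabetical order
print(linear_search(catalog, "codex"), binary_search(catalog, "codex"))
```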

Transfer of information:

· Source and receiver of information.

· Information channels.

· The role of the sense organs in the process of human perception of information.

· The structure of technical communication systems.

· What is encoding and decoding.

· The concept of noise; noise protection techniques.

· Information transfer rate and channel bandwidth.

Information transfer scheme:

Information source - information channel - information receiver.

Information is presented and transmitted in the form of a sequence of signals, symbols. From the source to the receiver, a message is transmitted through some material medium. If technical means of communication are used in the transmission process, then they are called information transmission channels (information channels). These include telephone, radio, TV. The human senses play the role of biological information channels.

The process of transferring information through technical communication channels follows the following scheme (according to Shannon):

The term "noise" refers to various kinds of interference that distort the transmitted signal and lead to loss of information. Such interference arises primarily for technical reasons: poor quality of communication lines, or insufficient isolation between the different information streams transmitted over the same channels. Various methods are used to protect against noise, for example filters that separate the useful signal from the noise.

Claude Shannon developed a special coding theory that provides methods for dealing with noise. One of the important ideas of this theory is that the code transmitted over the communication line must be redundant. Due to this, the loss of some part of the information during transmission can be compensated. However, you cannot make the redundancy too large. This will lead to delays and higher communication costs.
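The idea of redundancy can be sketched with the simplest possible redundant code, a threefold repetition code with majority-vote decoding. This is an illustrative assumption chosen for brevity, not a code named in the text; the error probability of 0.05 and the message length are also arbitrary.

```python
import random

def encode(bits, repeat=3):
    """Add redundancy: send every bit `repeat` times."""
    return [b for b in bits for _ in range(repeat)]

def noisy_channel(bits, error_probability, rng):
    """Flip each transmitted bit independently with the given probability."""
    return [b ^ 1 if rng.random() < error_probability else b for b in bits]

def decode(received, repeat=3):
    """Majority vote over each group of `repeat` received bits."""
    groups = [received[i:i + repeat] for i in range(0, len(received), repeat)]
    return [1 if sum(g) * 2 > len(g) else 0 for g in groups]

rng = random.Random(0)
message = [rng.randint(0, 1) for _ in range(10_000)]

plain = noisy_channel(message, 0.05, rng)                      # no redundancy
protected = decode(noisy_channel(encode(message), 0.05, rng))  # 3x redundancy

errors_plain = sum(a != b for a, b in zip(message, plain))
errors_protected = sum(a != b for a, b in zip(message, protected))
print(errors_plain, errors_protected)
```

The protected transmission loses far fewer bits, but it sends three times as many symbols, which is the delay and cost that limit how much redundancy is worthwhile.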

The measurement of information transmission speed can be explained by analogy with pumping water through pipes. Here the pipes are the channel for transferring water. The intensity (speed) of this process is characterized by the water flow rate, i.e. the number of liters pumped per unit of time. In information transmission, the channels are technical communication lines. By analogy with the water supply system, we can speak of the information flow transmitted through the channels. The information transfer rate is the information volume of a message transmitted per unit of time. Hence the units of measurement of the information flow rate: bit/s, byte/s, etc.

Another concept, the capacity of information channels, can also be explained with the "water-supply" analogy. The flow of water through the pipes can be increased by raising the pressure, but not indefinitely: if too much pressure is applied, the pipe may burst. There is therefore a limiting water flow rate, which can be called the throughput of the water supply. Technical data communication lines have a similar limit on the data transmission rate, and the reasons for it are also physical.

1. Classification and characteristics of the communication channel

A communication channel (link) is a collection of means for transmitting signals (messages).

To analyze information processes in a communication channel, you can use its generalized diagram shown in Fig. 1.

[Fig. 1. Generalized diagram of a communication channel, with blocks AI, P, LS, P and PI.]

In Fig. 1 the following designations are used: X, Y, Z, W are signals (messages); f is interference; LS is the communication line; AI and PI are the source and receiver of information; P are converters (coding, modulation, decoding, demodulation).

There are different types of channels that can be classified according to different criteria:

1. By the type of communication lines: wired; cable; fiber optic; power lines; radio channels, etc.

2. By the nature of the signals: continuous; discrete; discrete-continuous (signals at the input of the system are discrete and at the output continuous, or vice versa).

3. By noise immunity: channels without interference; channels with interference.

Communication channels are characterized by:

1. Channel capacity (volume), defined as the product of the channel usage time $T_k$, the bandwidth of frequencies passed by the channel $F_k$, and the dynamic range $D_k$, which characterizes the channel's ability to transmit different signal levels:

$$V_k = T_k F_k D_k. \qquad (1)$$

Condition for matching the signal with the channel (subscript c refers to the signal, subscript k to the channel):

$$V_c \le V_k; \quad T_c \le T_k; \quad F_c \le F_k; \quad D_c \le D_k.$$
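The matching condition can be checked mechanically. The following is a minimal sketch: the class name, field names and the numeric values are hypothetical, and the dynamic range is simply taken in whatever units are chosen for D.

```python
from dataclasses import dataclass

@dataclass
class Volume:
    duration_s: float      # T: usage time (channel) or duration (signal), seconds
    bandwidth_hz: float    # F: width of the frequency band, Hz
    dynamic_range: float   # D: dynamic range, in the units chosen for D

    @property
    def value(self) -> float:
        """Volume V = T * F * D."""
        return self.duration_s * self.bandwidth_hz * self.dynamic_range

def signal_fits_channel(signal: Volume, channel: Volume) -> bool:
    """Check T, F and D separately; for non-negative values this also gives V_c <= V_k."""
    return (signal.duration_s <= channel.duration_s
            and signal.bandwidth_hz <= channel.bandwidth_hz
            and signal.dynamic_range <= channel.dynamic_range)

channel = Volume(duration_s=600, bandwidth_hz=3100, dynamic_range=40)  # assumed values
signal = Volume(duration_s=120, bandwidth_hz=3000, dynamic_range=30)   # assumed values

print(channel.value, signal.value, signal_fits_channel(signal, channel))
```

Comparing the volumes alone would not be enough: a signal with a smaller volume can still exceed the channel in one dimension, for example in bandwidth, so each parameter is compared separately.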

2. Information transfer rate: the average amount of information transmitted per unit of time.

3.

4. Redundancy: ensures the reliability of the transmitted information ($R = 0 \div 1$, i.e. $R$ ranges from 0 to 1).

One of the tasks of information theory is to determine the dependence of the information transmission rate and communication channel capacity on the channel parameters and characteristics of signals and interference.

The communication channel can be figuratively compared to a road. Narrow roads carry little traffic but are cheap; wide roads carry a lot of traffic but are expensive. The bandwidth of a route is determined by its "bottleneck".
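The bottleneck rule can be written in one line: the bandwidth of a chain of links is the minimum over the links. In this sketch the path and its link rates are hypothetical.

```python
def path_bandwidth(link_rates_bps):
    """Bandwidth of a chain of links is set by its slowest link (the bottleneck)."""
    return min(link_rates_bps)

# Hypothetical path made of three consecutive links, rates in bit/s.
links = {"twisted pair": 1e6, "coaxial cable": 100e6, "fiber optic": 1e9}

bottleneck = min(links, key=links.get)
print(bottleneck, path_bandwidth(links.values()))
```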

The data transfer rate largely depends on the transmission medium in the communication channels, which are various types of communication lines.

Wired:

1. Twisted pair (twisting partially suppresses interference from external electromagnetic radiation). Transfer rates up to 1 Mbps. Used in telephone networks and for data transmission.

2. Coaxial cable. Transmission speed 10-100 Mbps. Used in local networks, cable TV, etc.

3. Fiber optic. The transmission rate is 1 Gbps.

In media 1–3, the attenuation in dB grows linearly with distance, i.e. the signal power drops exponentially. Therefore regenerators (amplifiers) must be installed at certain intervals.
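The link between a linear loss in dB and an exponential drop in power can be made concrete. In this sketch the attenuation of 0.2 dB/km, the input power of 1 mW and the minimum receiver power of 1 µW are assumed values chosen only for illustration.

```python
from math import log10

def received_power_w(input_power_w, attenuation_db_per_km, distance_km):
    """Power left after a line whose loss in dB grows linearly with distance."""
    loss_db = attenuation_db_per_km * distance_km
    return input_power_w * 10 ** (-loss_db / 10)

def max_span_km(input_power_w, min_power_w, attenuation_db_per_km):
    """Longest run before the power falls below the receiver's minimum."""
    budget_db = 10 * log10(input_power_w / min_power_w)
    return budget_db / attenuation_db_per_km

for km in (0, 50, 100, 150):
    # Every additional 50 km costs another 10 dB, i.e. another factor of 10 in power.
    print(km, received_power_w(1e-3, 0.2, km))

print(max_span_km(1e-3, 1e-6, 0.2))  # distance at which a regenerator is needed
```

Under these assumptions the power falls by a factor of ten every 50 km, so the spacing between regenerators follows directly from the power budget in dB divided by the attenuation per kilometer.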

Radio lines:

1. Radio channel. The transmission speed is 100-400 Kbps. Uses radio frequencies up to 1000 MHz. Up to 30 MHz, electromagnetic waves can propagate beyond the line of sight due to reflection from the ionosphere, but this range is very noisy (for example, amateur radio). From 30 to 1000 MHz the ionosphere is transparent and line of sight is required. Antennas are installed at a height (sometimes regenerators are installed as well). Used in radio and television.

2. Microwave lines. Transfer rates up to 1 Gbps. Radio frequencies above 1000 MHz are used. This requires line of sight and highly directional parabolic antennas. The distance between the regenerators is 10–200 km. Used for telephony, television and data transmission.

3. Satellite links. Microwave frequencies are used, and the satellite serves as a regenerator (for many stations at once). The characteristics are the same as for microwave lines.

2. Bandwidth of a discrete communication channel

A discrete channel is a collection of means for transmitting discrete signals.

Communication channel bandwidth is the highest theoretically achievable information transfer rate, provided that the error does not exceed a given value. Information transfer rate is the average amount of information transmitted per unit of time. Let us define expressions for calculating the information transfer rate and the bandwidth of the discrete communication channel.

When each symbol is transmitted, the average amount of information passing through the communication channel is determined by the formula

$$I(Y, X) = I(X, Y) = H(X) - H(X/Y) = H(Y) - H(Y/X), \qquad (2)$$

where $I(Y, X)$ is the mutual information, i.e. the amount of information contained in $Y$ about $X$; $H(X)$ is the entropy of the message source; $H(X/Y)$ is the conditional entropy, which determines the loss of information per symbol caused by noise and distortion.
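The symmetry of the mutual information can be checked numerically. The sketch below computes it both ways from a joint distribution, using the standard identity that the conditional entropy of X given Y equals the joint entropy minus H(Y). The joint distribution is an assumed example: a binary channel with equiprobable inputs and a 10% error probability.

```python
from math import log2

def entropy(probabilities):
    """Shannon entropy in bits; zero-probability terms contribute nothing."""
    return -sum(p * log2(p) for p in probabilities if p > 0)

def mutual_information(joint):
    """joint[i][j] = p(x_i, y_j). Returns I computed from both sides."""
    px = [sum(row) for row in joint]          # marginal distribution of X
    py = [sum(col) for col in zip(*joint)]    # marginal distribution of Y
    h_x, h_y = entropy(px), entropy(py)
    h_xy = entropy([p for row in joint for p in row])
    h_x_given_y = h_xy - h_y                  # H(X/Y): loss caused by noise
    h_y_given_x = h_xy - h_x                  # H(Y/X)
    return h_x - h_x_given_y, h_y - h_y_given_x

# Assumed example: equiprobable binary input, each symbol flipped with probability 0.1.
joint = [[0.45, 0.05],
         [0.05, 0.45]]

from_x, from_y = mutual_information(joint)
print(round(from_x, 3), round(from_y, 3))
```

Both values coincide at about 0.531 bit per symbol: of the 1 bit carried by each input symbol, roughly 0.469 bit is lost to noise.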

When a message $X_T$ of duration $T$ composed of $n$ elementary symbols is sent, the average amount of transmitted information, taking into account the symmetry of the mutual information, is

$$I(Y_T, X_T) = H(X_T) - H(X_T/Y_T) = H(Y_T) - H(Y_T/X_T) = n\,[H(X) - H(X/Y)]. \qquad (4)$$

The information transfer rate depends on the statistical properties of the source, the encoding method and the properties of the channel.

The bandwidth of a discrete communication channel is the maximum possible information transfer rate:

$$C = \max_{p(x)} \frac{I(Y_T, X_T)}{T}. \qquad (5)$$

The maximum of this functional is sought over the entire set of probability distribution functions $p(x)$.

The throughput depends on the technical characteristics of the channel (speed of the equipment, type of modulation, level of interference and distortion, etc.). Channel capacity is measured in bit/s and its multiples (for example Kbit/s, Mbit/s, Gbit/s).

2.1 Discrete communication channel without interference

If there is no interference in the communication channel, then the input and output signals of the channel are linked by an unambiguous, functional relationship.

In this case, the conditional entropy is zero, and the unconditional entropies of the source and receiver are equal, i.e. the average amount of information in the received symbol relative to the transmitted one is

$$I(X, Y) = H(X) = H(Y); \quad H(X/Y) = 0.$$

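If $n$ is the number of symbols transmitted in time $T$, then the information transfer rate for a discrete communication channel without interference is

$$\frac{I(Y_T, X_T)}{T} = \frac{n H(X)}{T} = V H(X), \qquad (6)$$

where $V = n/T = 1/\tau$ is the average symbol transmission rate and $\tau$ is the average duration of one symbol.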

Bandwidth for a discrete communication channel without interference:

$$C = \max_{p(x)} V H(X) = V \max_{p(x)} H(X). \qquad (7)$$

Because the entropy is maximal for equiprobable symbols, the capacity for a uniform distribution and statistically independent transmitted symbols is

$$C = V \log_2 m, \qquad (8)$$

where $m$ is the number of distinct symbols in the code alphabet.
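For equiprobable, independent symbols drawn from an alphabet of m symbols, the entropy reaches its maximum of log2 m bits per symbol, so the capacity of a noiseless channel is the symbol rate multiplied by log2 m. A minimal sketch follows; the function name and the sample symbol duration of 1 ms are assumptions.

```python
from math import log2

def noiseless_capacity_bps(symbol_duration_s, alphabet_size):
    """Capacity of a noiseless discrete channel: symbol rate times log2(m)."""
    symbol_rate = 1 / symbol_duration_s   # V, symbols per second
    return symbol_rate * log2(alphabet_size)

print(noiseless_capacity_bps(1e-3, 2))   # binary code, 1 ms per symbol
print(noiseless_capacity_bps(1e-3, 4))   # four-level code, same symbol duration
```

Doubling the number of bits per symbol doubles the capacity at the same symbol rate, which is why multi-level signalling raises throughput without shortening the symbols.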

Shannon's first channel theorem: if the information flow generated by the source is close enough to the bandwidth of the communication channel, i.e.

$$H'(X) = C - \varepsilon,$$

where $H'(X)$ denotes the information flow of the source, $C$ the channel bandwidth and $\varepsilon$ an arbitrarily small quantity,

then a coding method can always be found that ensures the transmission of all messages from the source, with an information transfer rate very close to the channel capacity.

The theorem does not answer the question of how to carry out the coding.

Example 1. The source generates 3 messages with probabilities:

$p_1 = 0.1$; $p_2 = 0.2$ and $p_3 = 0.7$.

Messages are independent and are transmitted in a uniform binary code ($m = 2$) with a symbol duration of 1 ms. Determine the speed of information transmission over the communication channel without interference.

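Solution: The entropy of the source is

$$H = -\sum_{i=1}^{3} p_i \log_2 p_i = -(0.1 \log_2 0.1 + 0.2 \log_2 0.2 + 0.7 \log_2 0.7) \approx 1.16 \text{ bit/message}.$$

To transmit 3 messages with a uniform binary code, two digits are required, so the duration of a code combination is $2\tau$, where $\tau = 1$ ms is the symbol duration.

Average signal rate

$$V = \frac{1}{2\tau} = 500 \text{ code combinations per second}.$$

Information transfer rate

$$C = V H = 500 \times 1.16 = 580 \text{ bit/s}.$$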
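The arithmetic of Example 1 can be reproduced in a few lines. The variable names are illustrative; the inputs are the probabilities, the 1 ms symbol duration and the two binary digits per message given in the example.

```python
from math import log2

probabilities = [0.1, 0.2, 0.7]   # message probabilities from Example 1
symbol_duration_s = 1e-3          # duration of one binary symbol
digits_per_message = 2            # a uniform binary code needs 2 digits for 3 messages

entropy_bits = -sum(p * log2(p) for p in probabilities)
message_rate = 1 / (digits_per_message * symbol_duration_s)
info_rate_bps = message_rate * entropy_bits

print(round(entropy_bits, 2), round(message_rate), round(info_rate_bps, 1))
```

Without intermediate rounding the rate comes out at about 578.4 bit/s; the figure of 580 bit/s arises from rounding the entropy to 1.16 before multiplying.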

2.2 Discrete communication channel with interference

We will consider discrete communication channels without memory.

A channel without memory is a channel in which each transmitted signal symbol is affected by interference regardless of which signals were transmitted earlier. That is, interference does not create additional correlations between symbols. The name "without memory" means that during each transmission the channel does not, as it were, remember the results of previous transmissions.
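The defining property of a memoryless channel can be checked by simulation. This sketch assumes a binary channel with an error probability of 0.1 (both are illustrative choices) and compares the overall error frequency with the error frequency immediately after a previous error.

```python
import random

def memoryless_channel(symbols, error_probability, rng):
    """Flip each binary symbol with the same probability, independently of the past."""
    return [s ^ 1 if rng.random() < error_probability else s for s in symbols]

rng = random.Random(1)
sent = [rng.randint(0, 1) for _ in range(200_000)]
received = memoryless_channel(sent, 0.1, rng)
errors = [a != b for a, b in zip(sent, received)]

# Overall error frequency.
p_error = sum(errors) / len(errors)

# Error frequency restricted to symbols that follow an erroneous symbol.
after_error = [cur for prev, cur in zip(errors, errors[1:]) if prev]
p_error_after_error = sum(after_error) / len(after_error)

print(round(p_error, 3), round(p_error_after_error, 3))
```

The two frequencies agree within statistical fluctuation: knowing that the previous symbol was corrupted says nothing about the next one. In a channel with memory, for instance one with burst errors, the second value would be noticeably larger.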

In the presence of interference, the average amount of information in a received message symbol $Y$ relative to the transmitted symbol $X$ is

$$I(Y, X) = H(X) - H(X/Y) = H(Y) - H(Y/X).$$

For a message $X_T$ of duration $T$ consisting of $n$ elementary symbols, the average amount of information in the received message $Y_T$ relative to the transmitted message $X_T$ is

$$I(Y_T, X_T) = H(X_T) - H(X_T/Y_T) = H(Y_T) - H(Y_T/X_T) = n\,[H(X) - H(X/Y)].$$

The corresponding value given for the information transfer rate is 2320 bit/s.

The throughput of the continuous noisy channel is calculated to be $C_k = 2322$ bit/s.

Let us prove that the information capacity of a continuous channel without memory with additive Gaussian noise under the limitation on the peak power is not more than the information capacity of the same channel with the same value of the limitation on the average power.

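For a symmetric uniform distribution on the interval $[-a, a]$, where the half-width $a$ is the peak value, the expected value is

$$m = \int_{-a}^{a} \frac{x}{2a}\,dx = 0.$$

Mean square for the symmetric uniform distribution:

$$\overline{x^2} = \int_{-a}^{a} \frac{x^2}{2a}\,dx = \frac{a^2}{3}.$$

Variance for the symmetric uniform distribution:

$$\sigma^2 = \overline{x^2} - m^2 = \frac{a^2}{3}.$$

Moreover, for a uniformly distributed process $a = \sqrt{3}\,\sigma$.

Differential entropy of a signal with uniform distribution:

$$h_u = \log_2 2a = \log_2\left(2\sqrt{3}\,\sigma\right).$$

The difference between the differential entropies of a normal and a uniformly distributed process with the same variance does not depend on the variance:

$$h_n - h_u = \frac{1}{2}\log_2\left(2\pi e \sigma^2\right) - \log_2\left(2\sqrt{3}\,\sigma\right) = \frac{1}{2}\log_2\frac{\pi e}{6} \approx 0.25 \text{ bit/sample},$$

or 0.3 bit/sample when rounded to one decimal place.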

Thus, the throughput and capacity of the communication channel for the process with normal distribution is higher than for the uniform one.
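The claim that the entropy gap does not depend on the variance can be verified numerically. The sketch uses the standard differential entropies of a normal distribution and of a symmetric uniform distribution with the same standard deviation; the function names and the sample values of sigma are assumptions.

```python
from math import e, log2, pi, sqrt

def h_normal(sigma):
    """Differential entropy of a normal distribution, bits per sample."""
    return 0.5 * log2(2 * pi * e * sigma ** 2)

def h_uniform(sigma):
    """Differential entropy of a symmetric uniform distribution with the same sigma."""
    half_width = sqrt(3) * sigma      # variance of uniform on [-a, a] is a^2 / 3
    return log2(2 * half_width)

for sigma in (0.5, 1.0, 10.0):
    # The gap is the same for every sigma: 0.5 * log2(pi * e / 6).
    print(sigma, round(h_normal(sigma) - h_uniform(sigma), 4))
```

Each line shows the same gap of about 0.2546 bit per sample, which rounds to the 0.3 bit per sample quoted in the text.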

Determine the capacity (volume) of the communication channel:

$$V_k = T_k C_k = 10 \times 60 \times 2322 = 1.3932 \text{ Mbit}.$$

Let us determine the amount of information that can be transmitted in 10 minutes of channel operation: 10 × 60 × 2322 = 1.3932 Mbit.

Tasks